I'm a Staff Mixed-Methods UX Researcher who builds research programs from scratch and pushes teams to ask harder questions. I'm AI-native, fluent in R and SQL, and was promoted twice in three years at Meta for doing high-leverage research at speed.

Research Experience

Meta | UX Researcher

Reality Labs — June 2022 - Present

Facebook Marketplace; Trust & Safety — August 2022 - June 2022

Facebook | UX Research Intern

Video Integrity — June 2021 - September 2021

Northwestern University | Cognitive Psychology Researcher

Reading Comprehension Lab — September 2017 - June 2022

Technical Skills

Survey Design

Experimental Design & A/B Testing

Data Manipulation & Analysis (R, SQL)

Statistical Analysis (Regressions, t-tests, ANOVA, Causal Modeling, Mixed Effect Modeling)

Data Visualization (ggplot, Tableau)

Participant Sampling

User Interviews and Focus Groups

Longitudinal Research and Diary Studies

Vendor Management

Workshop Organization

Select Projects

These case studies show my range across:

Large-scale quantitative studies (e.g., segmentation in Case Study 1)

Program/infrastructure building (e.g., quality measurement in Case Study 2)

Mixed-methods integration (e.g., content quality and trust in Case Study 3)

Both generative research (surfacing new insights) and evaluative research (measuring impact) across all 3 studies

Case Study 1: Understanding Quest Gamers - A New Segmentation Framework

The Challenge

Meta's VR gaming strategy needed a more nuanced understanding of who plays games in VR and why. Existing frameworks didn't capture the unique behaviors and motivations of VR gamers, making it difficult to prioritize content development, guide marketing strategies, or personalize user experiences.

My Role

Research Lead - I designed and executed this foundational segmentation study in partnership with Data Science, presented findings at Meta Connect 2025, and published an external blog post to share insights with the developer community (which they loved!).

Approach

I designed a large-scale quantitative study surveying thousands of users about their gaming behaviors, preferences, and motivations. The methodology combined:

Survey design: Created instruments measuring play frequency, genre preferences, social behaviors, and motivational drivers

Cluster analysis: Used R for dimensionality reduction, hierarchical clustering based on segmentation variables, and summarization of profiling variables

Behavioral validation: Linked survey responses to on-platform usage data to validate that segments reflected real-world behavior

Key Insights

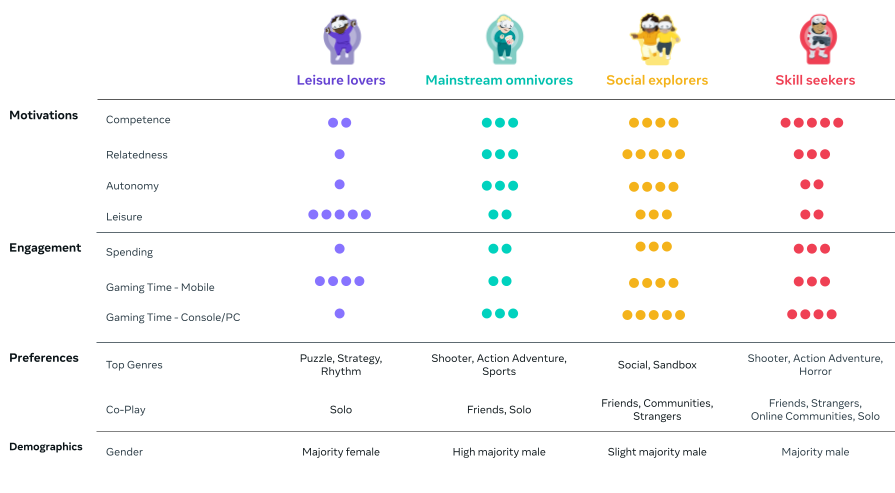

We identified four distinct gamer segments with fundamentally different needs:

Casual Gamers (“Leisure Lovers”) wanted quick, accessible experiences they could pick up without a learning curve

Social Gamers (“Social Explorers”) were motivated by connection and shared experiences with friends and family

Mainstream Gamers (“Mainstream Omnivores”) sought engaging gameplay without the complexity or time commitment of hardcore titles

Core Gamers (“Skill Seekers”) wanted deeper, longer experiences and were willing to invest time mastering complex mechanics

The surprise? The priorities and growth patterns of each segment were very different from leadership assumptions. Mainstream Gamers—not Core Gamers—represented the biggest opportunity for growth, which fundamentally shifted how we thought about content investment and hardware positioning. Features that Core Gamers considered essential (high-fidelity graphics, complex controls) were actually barriers for Mainstream and Casual players.

Impact

Redefined product positioning and target audience

Redirected content investment toward high-growth segments

Enabled targeted ranking algorithms and differentiated experimentation between gamer types

Equipped 1P and 3P developers with actionable audience insights and design recommendations

Informed marketing strategies and campaign messaging

Read the full external blog post →

Watch me present live at Meta Connect 2025 →

Methods Showcase

This project demonstrates:

Large-scale quantitative research design: Created survey instruments measuring complex behavioral and motivational constructs across thousands of users

Advanced statistical analysis (in R): Conducted dimensionality reduction, cluster analysis, and modeling to identify statistically distinct segments

Behavioral validation: Self-served on data, using SQL to link survey responses to on-platform usage patterns, ensuring segments reflected real-world behavior, not just stated preferences

Cross-functional collaboration: Worked with Data Science, Product, Marketing, and Content teams to translate academic segmentation into actionable business strategy and redirect product/content investment decisions

External communication: Distilled complex findings into accessible narratives for both internal leadership and external developers

Case Study 2: Building a Quality Measurement Program for Critical User Journeys

The Challenge

VR products were shipping with hundreds of usability issues that only surfaced after launch, when they were expensive to fix and already impacting user experience. Features were tested in isolation, but there was no systematic way to measure end-to-end product quality before it reached users.

My Role

Program Lead - I identified this gap, proposed and built the measurement framework from scratch, and scaled it across multiple product organizations.

Approach

I designed a cross-functional quality testing program that combined:

Task-based usability testing: Operationalized product vision by translating critical user flows into core usability tasks

Integrated scorecards: Partnered with QA and Data Science to triangulate signals — combining usability findings, functional bugs, and behavioral metrics into holistic reports

Dynamic dashboards: Built tracking systems to monitor quality trends over time and triage issues by severity

Embedded quality gates: Worked with leadership to establish usability readiness requirements as a launch criterion

Key Insights

The program surfaced a critical finding: teams were optimizing for the functionality of individual features but missing systematic usability problems that only emerged when testing the full experience (for example, finding and watching a movie in VR). What's more, users were abandoning key features not because they didn't work, but because they couldn't figure out how to use them in context.

Impact

Identified 500+ functional and usability issues across critical user flows

Closed ~150 high-priority fixes before launch

Achieved measurable quality improvements in BT pairing success, video quality, and feature failure rates

Saved $1M+ by internalizing vendor work and creating reusable frameworks

Standardized quality measurement across multiple product workstreams (design audits, localization, dogfooding programs)

Methods Showcase

This project demonstrates my ability to:

Build research infrastructure and measurement frameworks at scale

Combine qualitative usability insights with quantitative behavioral metrics

Work cross-functionally to embed research into product development processes

Self-serve on data (wrote SQL queries for recruitment, leveraged dashboards for instant metrics)

Case Study 3: When Engagement Metrics Miss the Real Story - Measuring Content Quality & Trust

The Challenge

Certain video content was driving high engagement metrics, but user feedback suggested something was off. Leadership needed to understand whether this content was actually good for the platform long-term.

My Role

Lead Researcher - I designed and executed a mixed-methods study examining how low-quality content affected user trust and platform perception.

Approach

I combined multiple methods to get a complete picture:

Behavioral analysis: Used SQL to analyze watch patterns, completion rates, and post-viewing behavior

Survey research: Designed on-platform surveys measuring user attitudes toward different content types

Integrated insights: Partnered with Data Science to combine behavioral data with self-reported sentiment

Key Insights

The data revealed a critical misalignment: content that performed well on engagement metrics (watch time, clicks) actually decreased user trust in the platform. People were watching because titles were sensationalized, but they felt worse about the platform afterward.

Impact

Shifted product strategy from pure engagement optimization to "meaningful video views"

Created new metrics combining behavioral signals with meaningfulness ratings

Influenced enforcement policy changes - moving from punitive to educational interventions

Changed how the team thought about content quality measurement

Methods Showcase

This project demonstrates my skillset in:

Mixed-methods integration (behavioral + survey data)

Translating research into new metric frameworks

Influencing strategic decisions when research contradicts topline metrics

Testimonials

"Nikita's ability to integrate qualitative and quantitative insights is truly exceptional. She doesn't just surface user pain points—she uncovers underlying behavioral patterns, identifies practical solutions, and ensures the user voice is a driving force in shaping product strategy. She excels at bridging the gap between data and decision-making, helping teams align on key priorities that truly enhance user experiences.

Her analytical rigor is matched by her clarity in communication and storytelling. Whether synthesizing complex research findings, guiding cross-functional teams through key insights, or influencing stakeholders, she has a remarkable ability to translate data into compelling narratives that drive alignment and impact.

She works seamlessly across product, design, engineering, and business teams, ensuring that research is not just informative but also actionable and deeply embedded in product decisions. Her ability to build strong relationships and advocate for the user ensures that every feature and experience is developed with real user needs in mind."

— JUSTIN STRAUB, AI Product Leader @ Adobe | Former Meta Product Leader

“I had the wonderful experience of working closely with Nikita, one of the strongest UX researchers I've had the pleasure of working with at Meta; I know any team would be successful with her expertise and strengths. She set the team up for success through her strengths including strong collaboration + execution, leadership and storytelling. Specific examples include the following:

Collaboration + Execution: When preparing research feedback sessions, she proactively looped the team in early by sharing early discussion guides, provided feedback on designs/prototypes, and always welcomed comments and questions to ensure sessions ran smoothly. After research sessions, she worked quickly to prepare in-depth analyses for the team to leverage concrete, actionable insights from her in-depth work. This was especially critical in helping the team move forward for a key Marketplace project within the Messaging space.

Leadership + Storytelling: She supported multiple teams and was viewed as a leader and voice for the user. Her enthusiasm to both speak about pain points and package them in a tangible way to help teams move forward at scale still continues to have impact today. She created rich presentations through data visuals, quotes, and excellent writing skills. These artifacts were especially impactful for quarterly planning and design sprints.”

— CYNDY ALFARO, PRODUCT DESIGNER @ META

“Nikita is a phenomenal UX researcher and would be an asset to any team. Her understanding of research design is remarkably strong, she executes her projects, and she effectively communicates with cross functional partners. Beyond that, she’s a fast and determined learner (she picked up SQL incredibly quickly) and a wonderful colleague.”